Running Multiple Processes in a Single Docker Container

In the world of modern software deployment, Docker has become a de facto standard. Its ability to create isolated, consistent environments has made it indispensable for developers and operations teams alike.

However, running multiple processes within a single Docker container is often frowned upon. This article challenges that notion, exploring how this approach can be both practical and efficient, especially when the processes are closely interconnected and need to work in unison.

It also introduces a Python-based solution called monofy, which provides a simple yet effective way to manage multiple processes within a single Docker container.

The Challenge

Information on how to effectively run multiple processes in a single Docker container is scarce. Most of the content you’ll find online instead simply discourages the idea, advocating for the “one process per container” philosophy.

Docker’s own docs do not discourage the practice as much as some of the community does, and even provide an example script for it. Unfortunately, that script is very basic and explicitly marked as a “naive” solution. The docs also don’t explain what is naive about it, or what the best practices would be.

So we did what any good developer would do: we rolled up our sleeves and took it upon ourselves to find a solution that meets our needs. We identified the following requirements:

- Start multiple processes within the container from a single parent process.

- Ensure proper signal handling, forwarding signals like SIGINT and SIGTERM to child processes.

- Forward output (stdout, stderr) to the main process, ensuring unified logging.

- If one child process exits, terminate all others so that all processes stop together.

- Wait for all child processes to exit before shutting down the parent, ensuring a clean and predictable shutdown process.

Because Python is our bread and butter, we created a simple solution using Python.

It’s called monofy and is available on PyPI. Although it is written in Python, it can be used with any language or framework.

Feel free to use it in your own projects, or as a starting point for your own implementation.
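To make the requirements listed above concrete, here is a minimal sketch of a supervisor that meets them using only the Python standard library. It is not monofy's actual source: the function name `supervise` and the `poll_interval` parameter are hypothetical, chosen for illustration, and the sketch assumes a POSIX system and that it runs in the main thread (Python only allows signal handlers to be installed there).

```python
import signal
import subprocess
import time


def supervise(commands, poll_interval=0.1):
    """Run every command as a child; stop them all as soon as one exits.

    `commands` is a list of argument lists, e.g. [["gunicorn", "app"], ...].
    Returns the children's exit codes in the same order.
    (Hypothetical helper for illustration, not monofy's API.)
    """
    # Children inherit the parent's stdout/stderr, so all output ends up in
    # one stream, which is what `docker logs` shows.
    children = [subprocess.Popen(cmd) for cmd in commands]

    def forward(signum, frame):
        # Pass SIGINT/SIGTERM on to every child that is still running.
        for child in children:
            if child.poll() is None:
                child.send_signal(signum)

    # Install the forwarding handler, remembering the previous handlers.
    previous = {
        sig: signal.signal(sig, forward)
        for sig in (signal.SIGINT, signal.SIGTERM)
    }
    try:
        # Block until any one child has exited.
        while all(child.poll() is None for child in children):
            time.sleep(poll_interval)

        # Ask the remaining children to stop as well.
        for child in children:
            if child.poll() is None:
                child.terminate()

        # Only return once every child is really gone.
        return [child.wait() for child in children]
    finally:
        # Restore the original handlers for the caller.
        for sig, handler in previous.items():
            signal.signal(sig, handler)
```

Calling `supervise([["gunicorn", "myapp.wsgi"], ["my-worker"]])` (both commands are placeholders) would start the two programs side by side and return once both have stopped. A production version would probably also escalate to SIGKILL if a child ignores SIGTERM; that is left out here to keep the sketch short.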

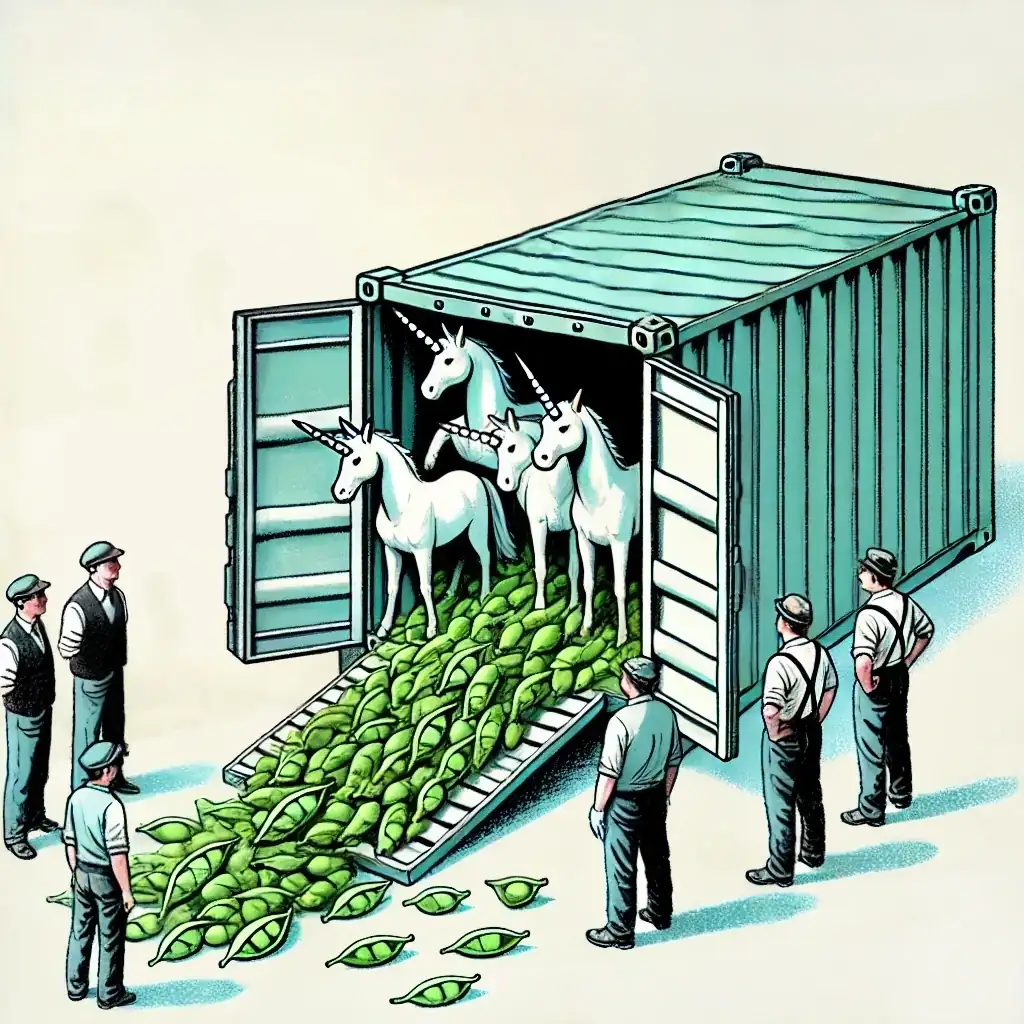

Blasphemy!

Running multiple processes in a single Docker container is often considered a bad practice. The stock response is “one process per container” and “use Docker Compose”. To be fair, this is good advice in many cases. But it’s not the only way to do things. Here’s why we think it’s okay in our case.

Ease of deployment

In our case, the overriding goal, Docker or not, is ease of deployment and maintenance. Bugsink is designed to be self-hosted, and if we can’t get people to deploy it, we’re out of business.

The reason we picked up Docker at all is to simplify deployment for people familiar with Docker but not necessarily with Python. I’d say we can do that without following every Docker convention to the letter.

However you look at it, running multiple processes in a single container is simpler than running multiple containers. You don’t have to worry about networking, volumes, or dependencies between containers. You don’t have to worry about orchestrating multiple containers, or about the overhead of running multiple containers on a single host.

Tools like Docker Compose and Kubernetes might be useful, but there is no doubt they add complexity and overhead.

What is “one thing” anyway?

The second question is what exactly constitutes “one thing”. Let’s see what Docker itself has to say about the matter:

It’s best practice to separate areas of concern by using one service per container. That service may fork into multiple processes (for example, Apache web server starts multiple worker processes). It’s ok to have multiple processes, but to get the most benefit out of Docker, avoid one container being responsible for multiple aspects of your overall application.

That just puts the question back to us: what are the “areas of concern” or “aspects” in our application?

In the Bugsink architecture, we have a Gunicorn server, with a very tightly integrated background process called Snappea. Think of Snappea as a tiny version of Celery, but with a much simpler design and a much smaller scope, and no need for a separate message broker. Instead, it uses a SQLite database as a queue, and gets work fed to it over the filesystem by the Gunicorn server.

This is to say: Gunicorn and Snappea form a single “area of concern”, namely to serve the Bugsink application. It just happens that in the Python ecosystem, it’s hard to do that in a single operating system process. So we split it into two processes. All our tool does is to re-unite them under a single parent.
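For readers unfamiliar with the idea of using a SQLite database as a queue, the following is a minimal sketch of the general technique. It is not Snappea's actual implementation: the `tasks` table, the JSON payload format, and the helpers `open_queue`, `enqueue`, and `dequeue` are all hypothetical, and the sketch assumes a single worker consuming the queue.

```python
import json
import sqlite3


def open_queue(path=":memory:"):
    """Open (or create) the queue database. Hypothetical schema."""
    conn = sqlite3.connect(path)
    conn.execute(
        "CREATE TABLE IF NOT EXISTS tasks ("
        "id INTEGER PRIMARY KEY AUTOINCREMENT, payload TEXT NOT NULL)"
    )
    conn.commit()
    return conn


def enqueue(conn, task_name, **kwargs):
    """Producer side (e.g. the web server): append one task."""
    with conn:  # commits on success, rolls back on error
        conn.execute(
            "INSERT INTO tasks (payload) VALUES (?)",
            (json.dumps({"task": task_name, "kwargs": kwargs}),),
        )


def dequeue(conn):
    """Worker side: remove and return the oldest task, or None if empty.

    Assumes a single consumer; several workers would need extra locking.
    """
    with conn:
        row = conn.execute(
            "SELECT id, payload FROM tasks ORDER BY id LIMIT 1"
        ).fetchone()
        if row is None:
            return None
        conn.execute("DELETE FROM tasks WHERE id = ?", (row[0],))
    return json.loads(row[1])
```

Because the queue is just a file on disk, both processes only need access to the same filesystem, which they have for free when they live in the same container.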

Finally, and not so seriously: the wrapper script we wrote is called “monofy”. The word “mono” means “one” in Greek, so it’s a “one-ifier”. This provides a cunning way to fool the Docker gods into thinking we’re following their advice.

Scalability

The primary argument for “one process per container” is scalability. The idea is that you allow each service to scale independently, adapting to varying loads and ensuring that no single process becomes a bottleneck.

Our approach is somewhat different. We’ve designed the Bugsink architecture to be highly efficient and scalable without the need for complex orchestration tools like Kubernetes.

By just making everything blazingly fast, we’ve minimized latency and overhead, ensuring that the system performs efficiently as a single cohesive unit. Deploying as a single image is part of that strategy.

Ultimately, the database is the bottleneck, not the application server. Therefore, consolidating these processes into a single container doesn’t hinder our overall scalability but simplifies deployment and management.

Existing solutions

One of the very few resources we found on running multiple processes in a single container, and on defending the practice, was Phusion’s baseimage-docker.

So why didn’t we just use that instead?

- It’s big. It’s already based on Ubuntu and includes unnecessary components like SSH and cron. We’d rather have freedom to choose our base image.

- Whether it is well-maintained is unclear. The last update was four months ago, and the Docker Hub description still incorrectly references Ubuntu 16.04. In any case, they recommend running apt-get update && apt-get upgrade yourself to keep it somewhat up-to-date.

- We’d rather not have runit in the first place. More stuff to think about, more stuff to go wrong. We’d rather have a simple, Python-based solution. Using runit also makes it harder to run one-off commands in the container.

Conclusion

Running multiple processes in a single Docker container isn’t just feasible — it’s sometimes the best solution.

By focusing on minimizing complexity, and ensuring tight integration between processes, we’ve managed to create a system that is both scalable and maintainable.

While the one-process-per-container philosophy is often beneficial, it’s not a hard and fast rule. In scenarios like ours, where the database is the true bottleneck and processes are tightly coupled, consolidating everything into a single container can simplify deployment and improve performance without sacrificing scalability.

Now that you’re here: Bugsink is a simple, self-hosted error tracking tool that’s API-compatible with Sentry’s SDKs. Check it out