Saving Costs on Sentry

Tracking errors is essential for understanding and improving your application in production. Tools like Sentry make this easy – but once your error volume grows, the costs can escalate quickly:

-

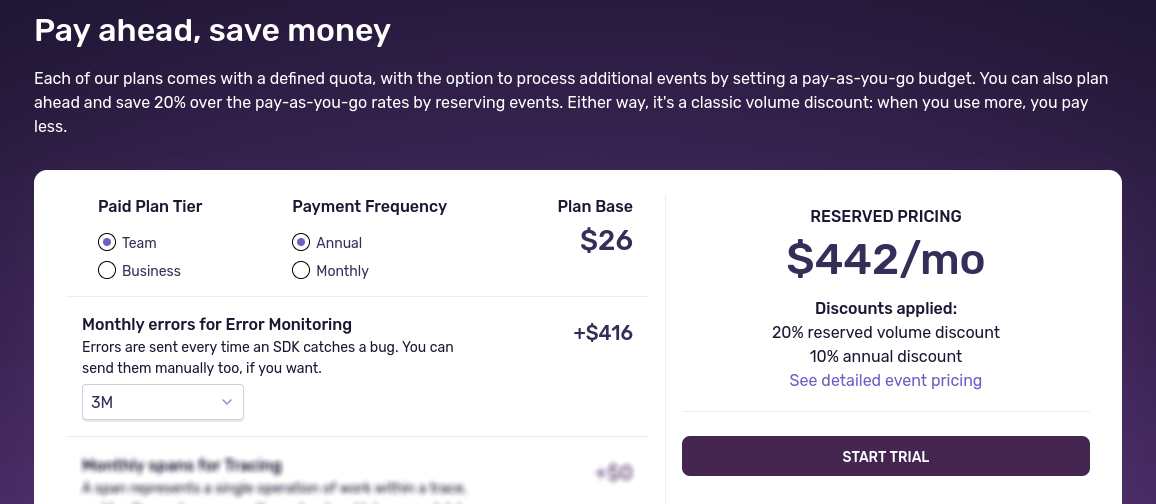

At 100K errors per day – just one per second on average – you’re already paying about $442/month with Sentry’s current pricing and assuming the best-case (annual billing, team plan, no pay-as-you-go).

-

At 1M errors per day, this jumps to approximately $3,637/month.

Sentry offers robust features, but at this scale, pricing becomes a real barrier. Exploring alternatives can significantly reduce your costs without sacrificing monitoring quality.

It’s Okay to Track Many Errors

But shouldn’t good software produce fewer errors?

Sure – and in a perfect world, it would. But real-world systems fail in unexpected ways. During a crisis – a broken deploy, overloaded service, or external outage – errors can spike dramatically. And in those moments, you want as much information as possible.

You shouldn’t have to decide, in the middle of an incident, which errors are worth tracking and which ones you can afford to drop.

That’s where pay-per-error pricing becomes a problem. It forces trade-offs when you least want them. Will your tracker silently drop events? Will it keep going and leave you with a massive bill? You’re not always sure – and that uncertainty adds stress at exactly the wrong time.

A predictable, scalable error tracker removes that pressure. It gives you the space to collect, diagnose, and resolve. After you’ve fixed the issue, you can always clean up and move on.

The Cost of Sentry

If you’re reading this, you probably don’t need me to tell you that tracking high volumes of errors through Sentry can get expensive fast. As your application’s error volume grows, costs scale directly with usage (see Sentry’s pricing):

- At 100K errors per day (about one per second), you’re looking at roughly $442/month.

- At 1M errors per day, this jumps to approximately $3,637/month.

- At multiple millions per day, you’re quickly into “contact sales” territory.

And that’s in the favorable scenario:

- You’re on the Team plan, which lacks certain advanced features you might eventually need.

- You pay upfront, committing significant funds before knowing your actual needs.

- You pay yearly, locking yourself in even if your error volumes fluctuate unpredictably.

In less favorable scenarios—opting for monthly payments (+20%), choosing the Business plan (+100%), or relying on pay-as-you-go pricing—the cost can quickly spiral even further. This leaves you in a difficult position: either carefully limit what you track (potentially missing critical issues), or accept the unpredictability of your monthly bill.
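To make these surcharges concrete, here is a small Python sketch that applies them to the best-case figures above. It assumes, purely for illustration, that the monthly-billing and Business-plan surcharges compound multiplicatively. Check Sentry’s pricing page for how they actually combine. Pay-as-you-go overage is not modeled at all.

```python
# Best-case monthly prices quoted above (Team plan, annual billing, no pay-as-you-go).
BEST_CASE_USD = {
    100_000: 442.0,    # errors per day -> USD per month
    1_000_000: 3637.0,
}

MONTHLY_BILLING_SURCHARGE = 0.20  # +20% for paying month-to-month
BUSINESS_PLAN_SURCHARGE = 1.00    # +100% for the Business plan


def estimate_monthly_cost(best_case_usd: float,
                          monthly_billing: bool = False,
                          business_plan: bool = False) -> float:
    """Apply the surcharges to a best-case monthly price.

    Assumption (illustrative only): the surcharges multiply with each other.
    """
    cost = best_case_usd
    if monthly_billing:
        cost *= 1 + MONTHLY_BILLING_SURCHARGE
    if business_plan:
        cost *= 1 + BUSINESS_PLAN_SURCHARGE
    return round(cost, 2)


if __name__ == "__main__":
    for per_day, base in BEST_CASE_USD.items():
        worst = estimate_monthly_cost(base, monthly_billing=True, business_plan=True)
        print(f"{per_day:>9,} errors/day: ${base:,.2f} best case, "
              f"${worst:,.2f} with monthly billing on the Business plan")
```

Under that assumption, the 1M-errors-per-day case goes from about $3,637 to about $8,729 per month.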

At these volumes, predictable pricing and scalability become crucial factors—not optional features.

Self-Hosting as a Cost-Effective Alternative

When faced with these rising costs, some teams first consider self-hosting Sentry itself. At first glance, this seems appealing: you keep a familiar tool but gain control over infrastructure costs and scalability. However, this comes with significant complexity. Self-hosted Sentry is notoriously resource-intensive, challenging to set up, and demanding in terms of ongoing maintenance and upgrades—often requiring dedicated engineering effort to keep it running smoothly.

For teams already stretched thin, or those looking for simplicity and predictability, self-hosted Sentry might not be a viable long-term solution (see my earlier experience with self-hosted Sentry). In practice, the overhead can quickly outweigh potential cost savings, leading teams to explore simpler alternatives.

Self-Hosted Alternatives

To mitigate the financial impact of high-volume error tracking, many teams are now exploring simpler self-hosted alternatives like Bugsink. By running their own error tracking infrastructure, teams significantly reduce monthly expenses and gain clear control over scalability, privacy, and budgeting.

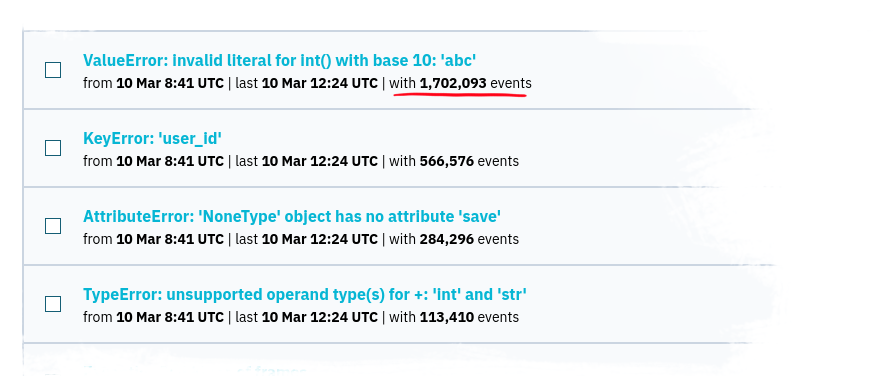

Unlike self-hosted Sentry, Bugsink was specifically designed to be lightweight and straightforward to maintain. As an example, one of our customers handles more than a million errors per day with Bugsink, hosting it on Google Cloud Platform (GCP) at infrastructure costs of less than $100 per month. This approach offers a clear financial advantage: instead of facing unpredictable event-based billing, teams can now rely on stable, predictable infrastructure costs.

The specific advantage Bugsink has over self-hosted Sentry is operational simplicity. While running your own Sentry instance can demand significant ongoing maintenance and expertise, a typical Bugsink setup – even at high event volumes – usually requires just a few hours per month or less. This ease of use means even smaller teams or those without dedicated infrastructure engineers can comfortably manage their error tracking. It makes both your costs and your maintenance predictable.

Why not Bugsink?

Bugsink isn’t a drop-in replacement for Sentry, and it doesn’t try to be.

It focuses strictly on error tracking. There’s no performance tracing, no session replays, no dashboards for latency percentiles or database timings. If you need APM features, or if you want an all-in-one observability platform, Bugsink won’t cover that.

What it does offer is a complete, reliable, and scalable solution for capturing, grouping, and triaging errors. It’s full-featured in that domain, not a toy or a partial implementation. You get event grouping, triage history, detailed payloads, search/filtering, and the ability to retain large volumes of data – without paying per event.

So if you need advanced analytics and tracing, keep using Sentry or a similar tool – maybe just not for everything. But if you just want to track and fix errors without getting a surprise bill, Bugsink does that job well.

Configuration and Infrastructure

Initial setup for Bugsink takes only minutes, and despite its lightweight nature, such a setup can easily handle tens of thousands of errors per day. In other words, for up to roughly 1M errors per month, the default setup is perfectly adequate.

However, for high-volume environments, it’s recommended to spend a bit more time on tuning Bugsink’s configuration and infrastructure.

Storage requirements

Perhaps somewhat surprisingly, the biggest infrastructure consideration isn’t CPU or memory—it’s storage.

As noted in the scalability guide, Bugsink can comfortably handle around 2.5 million events per day on modest hardware. But at that rate, you’re dealing with 75 million events per month.

With an average event size of around 50 KB (based on observed data from Python setups), that adds up to 3.75 TB of data per month.
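The arithmetic behind these figures, assuming a 30-day month and decimal units (1 KB = 10^3 bytes, 1 TB = 10^12 bytes, so 1 TB = 10^9 KB):

```latex
\begin{aligned}
2.5 \times 10^{6}\,\tfrac{\text{events}}{\text{day}} \times 30\,\text{days}
  &= 7.5 \times 10^{7}\,\text{events per month} \\
7.5 \times 10^{7}\,\text{events} \times 50\,\text{KB}
  &= 3.75 \times 10^{9}\,\text{KB} = 3.75\,\text{TB}
\end{aligned}
```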

It’s worth checking your actual event sizes before planning, as they may vary. Bugsink supports events up to 1 MB, but this is a hard upper bound—most real-world events will be far smaller.

Now, whether you actually need to store all that data is another question.

Bugsink lets you set a retention cap per project – not in days, but in total number of stored events. Depending on your event volume, that cap translates back to a certain number of days’ worth of data. That mapping isn’t linear (because Bugsink prioritizes more relevant events), but it gives you control. You decide what you want to keep, and Bugsink enforces it without letting your storage run away from you.

So how do you turn all this into a concrete storage plan?

Start by estimating your average event size. If you don’t have exact numbers yet, pick something with a bit of margin – say, 50–100 KB. Then make a similar rough guess for daily volume, again with margin for spikes or unexpected growth.

Next, look at available disk sizes—whatever makes sense in your hosting setup. Don’t forget to leave room for the OS, logs, and other overhead (a few GB at least). Once you’ve picked a disk size you’re comfortable with (both technically and financially), you can work backwards: divide that size by your estimated event size, and you’ll get an approximate retention cap in number of events. From there, you can estimate how many days’ worth of events that will hold.
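A minimal Python sketch of this back-of-the-envelope calculation follows. The function name, the example disk size, and the overhead figure are hypothetical. The day count assumes a steady daily volume and ignores the non-linear way Bugsink picks which events to evict, so treat it as a rough guide only.

```python
KB = 10**3
GB = 10**9


def retention_from_disk(disk_bytes: int,
                        overhead_bytes: int,
                        avg_event_bytes: int,
                        events_per_day: int) -> tuple[int, float]:
    """Work backwards from a disk size to a retention cap.

    Returns (retention cap in number of events, approximate days of data).
    """
    usable = disk_bytes - overhead_bytes  # leave room for OS, logs, database, ...
    if usable <= 0:
        raise ValueError("overhead leaves no room for event data")
    cap = usable // avg_event_bytes       # how many events fit in the remaining space
    days = cap / events_per_day           # rough: assumes a steady daily volume
    return cap, days


if __name__ == "__main__":
    # Hypothetical example: 500 GB disk, 20 GB reserved, 100 KB events, 1M events/day.
    cap, days = retention_from_disk(500 * GB, 20 * GB, 100 * KB, 1_000_000)
    print(f"retention cap = {cap:,} events (about {days:.1f} days)")
```

For the example numbers this prints `retention cap = 4,800,000 events (about 4.8 days)`.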

Keep in mind that eviction is based on individual events, not grouped issues, and the process isn’t perfect yet. There’s some vacuuming and cleanup logic involved, and improvements are in the works—expect a follow-up article soon.

So: if you’re expecting high volumes, it’s worth running your own numbers. Take your estimated daily volume, your typical event size, and your desired retention—and you’ll have a decent approximation of what kind of storage you’ll need.
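The same estimate, run in the forward direction, might look like this. The `headroom` multiplier is a hypothetical safety factor (not a Bugsink setting) standing in for the margin for spikes and growth mentioned above; the function name and example numbers are likewise only illustrative.

```python
GB = 10**9


def required_disk_bytes(events_per_day: int,
                        avg_event_bytes: int,
                        retention_days: float,
                        overhead_bytes: int,
                        headroom: float = 1.5) -> int:
    """Estimate disk size from daily volume, event size and desired retention."""
    event_data = events_per_day * retention_days * avg_event_bytes
    # Apply a safety margin to the event data, then add fixed overhead (OS, logs, ...).
    return int(event_data * headroom) + overhead_bytes


if __name__ == "__main__":
    # Hypothetical: 100K events/day of 50 KB each, kept for 30 days, 10 GB overhead.
    needed = required_disk_bytes(100_000, 50_000, 30, 10 * GB)
    print(f"{needed / GB:.0f} GB")  # 150 GB of events * 1.5 + 10 GB = 235 GB
```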

Oh… and if you’re already saving thousands of dollars per month by self-hosting, it makes sense not to “fly too close to the sun” just to shave off a few more dollars. A slightly larger setup can significantly improve stability.

Configuration

For more detail on how Bugsink stores event data and how to scale storage cleanly, see the article Moving Event Data Out of the Database.

By default, Bugsink stores full event payloads directly in the main database. This works well for small or medium setups, but at high volume, it introduces some problems. Over time, the database becomes large and unwieldy—slowing down writes, making queries less efficient, and turning backups into a bottleneck.

The solution is to move event data out of the database. In this setup, the database keeps only metadata and indexes, while the raw event bodies are written to a separate storage location. This could be a dedicated disk path, a mounted volume, or anything else you control.
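To illustrate the split, here is a toy Python sketch of the general pattern. It is not Bugsink’s actual storage code or configuration: a small SQLite table holds only metadata and an index, while each raw event body is written as a JSON file in a separate directory. The class name, table layout, and file naming are all hypothetical.

```python
import json
import sqlite3
import tempfile
import uuid
from pathlib import Path


class SplitEventStore:
    """Toy store: metadata rows in SQLite, raw event bodies as files on disk."""

    def __init__(self, db_path: str, payload_dir: str):
        self.db = sqlite3.connect(db_path)
        self.payload_dir = Path(payload_dir)
        self.payload_dir.mkdir(parents=True, exist_ok=True)
        with self.db:
            # Only small, queryable columns live in the database.
            self.db.execute(
                "CREATE TABLE IF NOT EXISTS event ("
                "id TEXT PRIMARY KEY, project TEXT NOT NULL, received_at REAL NOT NULL)"
            )
            self.db.execute("CREATE INDEX IF NOT EXISTS event_project ON event (project)")

    def _path(self, event_id: str) -> Path:
        return self.payload_dir / f"{event_id}.json"

    def store(self, project: str, received_at: float, payload: dict) -> str:
        event_id = uuid.uuid4().hex
        # Write the body first, so a metadata row never points at a missing file.
        self._path(event_id).write_text(json.dumps(payload))
        with self.db:
            self.db.execute(
                "INSERT INTO event (id, project, received_at) VALUES (?, ?, ?)",
                (event_id, project, received_at),
            )
        return event_id

    def load(self, event_id: str) -> dict:
        return json.loads(self._path(event_id).read_text())

    def delete(self, event_id: str) -> None:
        with self.db:
            self.db.execute("DELETE FROM event WHERE id = ?", (event_id,))
        self._path(event_id).unlink(missing_ok=True)

    def count(self, project: str) -> int:
        (n,) = self.db.execute(
            "SELECT COUNT(*) FROM event WHERE project = ?", (project,)
        ).fetchone()
        return n


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        store = SplitEventStore(f"{tmp}/meta.sqlite3", f"{tmp}/events")
        eid = store.store("backend", 1_700_000_000.0, {"exception": "ZeroDivisionError"})
        print(store.count("backend"), store.load(eid))
        store.db.close()
```

Because the database only ever holds the small metadata rows, its size tracks the number of events rather than their total payload size, which is what keeps vacuuming and backups of the database itself cheap.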

The result is a more manageable system. Vacuuming and migrations become much faster. Your database remains small, and performance stays predictable even as volume grows. It’s one of the simplest changes you can make to ensure Bugsink stays fast and stable over time.

Testing your setup

At this scale, it’s a good idea to run some tests of your own. The estimates given here are based on one particular setup using SQLite, and your actual storage characteristics may differ—especially if you’re using a different database backend or running Bugsink in a more customized environment.

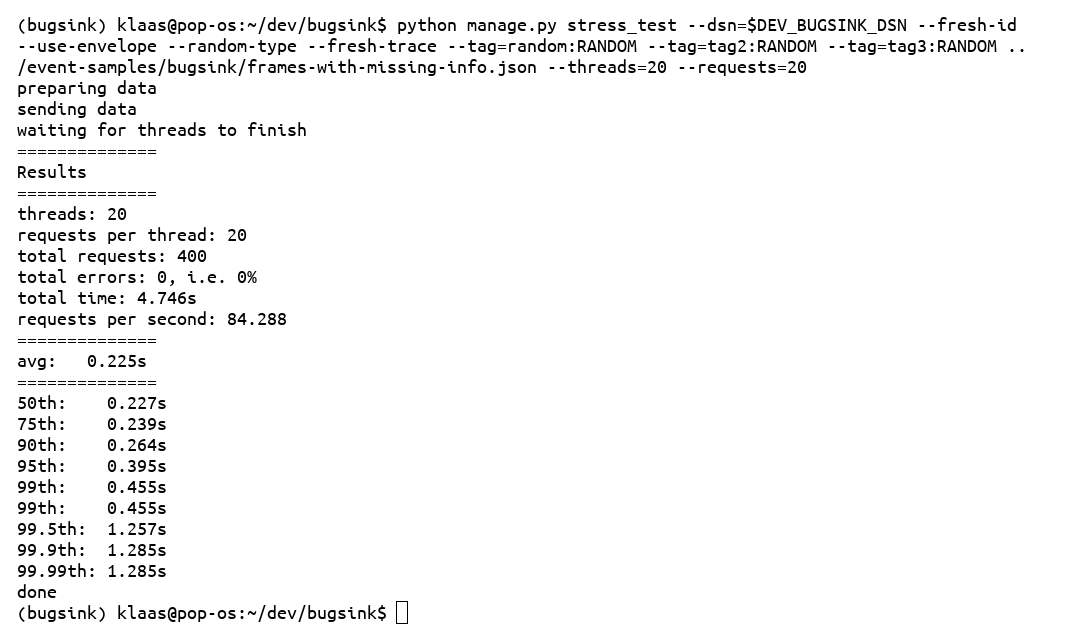

For quick testing, you can use the stress_test management command. To see its available options, run:

```shell
bugsink-manage stress_test --help
```

This will flood your setup with realistic test data, giving you a clearer picture of storage usage, throughput, and performance under load. It’s a practical way to validate your assumptions before committing to a production configuration.

Note that because Bugsink queues events for processing, an event that has been sent has not necessarily been processed yet, so the results of the stress test are not directly representative of Bugsink’s total capacity. Instead, they give you a sense of how your setup behaves under heavy load. For a more accurate picture, measure the time from the moment you send your first event to the moment your last event has been processed.
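As a minimal sketch of that measurement: given the number of events sent, the time the first one was sent, and the time the last one finished processing (however you obtain those timestamps in your setup), the effective throughput follows directly. The function name and the example numbers are hypothetical.

```python
from datetime import datetime

SECONDS_PER_DAY = 86_400


def effective_throughput(n_events: int,
                         first_sent: datetime,
                         last_processed: datetime) -> tuple[float, float]:
    """Return (events per second, events per day) over the full send-to-processed window."""
    elapsed = (last_processed - first_sent).total_seconds()
    if elapsed <= 0:
        raise ValueError("last_processed must be after first_sent")
    per_second = n_events / elapsed
    return per_second, per_second * SECONDS_PER_DAY


if __name__ == "__main__":
    # Hypothetical run: 120,000 events, fully processed 5 minutes after the first send.
    rate, per_day = effective_throughput(
        120_000,
        datetime(2024, 1, 1, 12, 0, 0),
        datetime(2024, 1, 1, 12, 5, 0),
    )
    print(f"{rate:.0f} events/s, about {per_day:,.0f} events/day")
```

For the example this prints `400 events/s, about 34,560,000 events/day`. Stopping the clock when the last event is merely sent would shorten the window and so overstate capacity, since the queue may still be draining.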

Make sure to set the quota to something reasonable for your test environment, and keep an eye on disk usage as the test runs. You can always stop the test early if you see unexpected growth or performance issues.
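One way to keep an eye on disk usage is to sample it twice and project how long the remaining space will last at the current growth rate. This is a minimal sketch using Python’s standard `shutil.disk_usage`. The function names are hypothetical, and because it measures the whole filesystem, growth caused by other processes on the same disk is counted too.

```python
import shutil
import time


def sample_growth(path: str, interval_seconds: float) -> tuple[int, int, int]:
    """Return (used bytes before, used bytes after, free bytes after) for path's filesystem."""
    before = shutil.disk_usage(path).used
    time.sleep(interval_seconds)
    after = shutil.disk_usage(path)
    return before, after.used, after.free


def hours_until_full(used_before: int, used_after: int,
                     interval_seconds: float, free_bytes: int) -> float:
    """Project hours until the disk is full, assuming growth continues at the sampled rate."""
    if interval_seconds <= 0:
        raise ValueError("interval_seconds must be positive")
    growth_per_second = (used_after - used_before) / interval_seconds
    if growth_per_second <= 0:
        return float("inf")  # usage did not grow during the sample
    return free_bytes / growth_per_second / 3600


if __name__ == "__main__":
    # Point this at the directory that holds your event data; "." is a placeholder.
    before, after, free = sample_growth(".", 60)
    print(f"disk full in about {hours_until_full(before, after, 60, free):.1f} hours")
```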

Support Options

Running Bugsink yourself typically requires very little ongoing effort. In most cases, maintenance is minimal—and only under heavy load does it increase, topping out at a few hours per month.

But if you’d rather not think about it—or just want someone on call when things go sideways—enterprise support is available.

Support is optional and offered on a case-by-case basis. Pricing depends on your setup and needs, but even with support included, you’re likely to come out far ahead compared to hosted high-volume plans.

What do you get? Priority access to the developer (me), help with tuning your configuration, assistance with upgrades, and advice on scaling or storage planning. If you hit a wall, I’ll help dig into it. If something’s unclear in the docs, I’ll write it up. If your stack is weird, we’ll figure it out together.

In short, support is meant to make self-hosting Bugsink feel less like running your own infrastructure and more like using a service – just one that happens to live on your own machines.

Interested? Just send me an email and we’ll figure out what makes sense for your setup.

Conclusion

If you’re dealing with high error volumes, hosted tools like Sentry can become a serious expense — especially once you move beyond a few million events per month. Self-hosting is a way to cut costs, but many of the available options come with their own maintenance burden.

Bugsink was built to be the exception: a self-hosted error tracker that’s simple to run, straightforward to scale, and predictable in both cost and upkeep.

It’s not an all-in-one observability suite. But if what you need is reliable error tracking – at any scale, without surprise bills — Bugsink does that job well.

No need to reach out first. Just install it – it takes about 30 seconds – and see how it fits.